Introducing automated testing to small tech teams

Published by

Cristiano Chiaramelli

Introducing automated testing to a small team that is not yet familiar with the process can be challenging for several reasons. This article presents my opinion on these challenges and on how I believe the adoption of this process can be approached efficiently in a specific context: a team developing a general-purpose web application, with no previous experience with automated tests.

Why are automated tests a good idea?

A common scenario for a small team is working on an early-stage product that is still searching for the right value proposition and features, or on an older system that still operates on a low budget. The project scope changes frequently, and customer feedback can change backlog priorities overnight. Quality assurance is usually limited to numerous manual testing routines, before and after releases, to ensure that the system still behaves as expected after changes.

As the product grows, the technical solution becomes harder to maintain (changes to one feature often break another), and the usual manual tests become more difficult, tedious and error-prone. In addition, new customers (often more demanding about quality than the early adopters) start using the product, increasing both the number of bugs found and the expectations of a higher quality system. In this scenario, it's clear that increasing the man-hours spent on manual testing before releases simply won't scale.

Automated testing provides the software development team with a consistent and repeatable method of testing the application's functionality. This improves product quality by ensuring that a) new code works as intended and b) changes do not break existing features.

Some context on automated tests

It's important to note that setting up an automated testing environment goes beyond writing test scripts, and here I'd like to highlight a few factors:

- Tests need to be effective in detecting defects and bugs, avoiding false negatives; a test script that doesn't test anything relevant will always succeed.

- Tests need to be repeatable, to ensure consistent results and ease of use in different stages of the development process; tests that rely on external factors such as local system time or third-party API responses will lead to non-deterministic results.

- Tests need to be efficient, run quickly, and should not lock up developers' workspaces. This is especially important to encourage adoption and regular use of automated testing processes by the team.

While a thorough examination of each bullet point would be valuable, here are some practical steps to take for improvement:

- To increase testing effectiveness, the team should learn the principles and best practices of automated testing; I also suggest adopting code review for both production and test code.

- To ensure test repeatability, the team must be aware of external dependencies and use techniques such as mocking and test doubles to prevent them from interfering with test results in a non-deterministic way.

- To increase testing efficiency, I suggest implementing a Continuous Integration environment. This allows for parallel execution on machines in the cloud, keeping developers' workspaces free, as well as promoting repeatability by standardizing test execution in a centralized environment.

Another important point is that automated tests can be implemented using different approaches and on different software layers, resulting in different types of coverage as well as different development and maintenance costs.

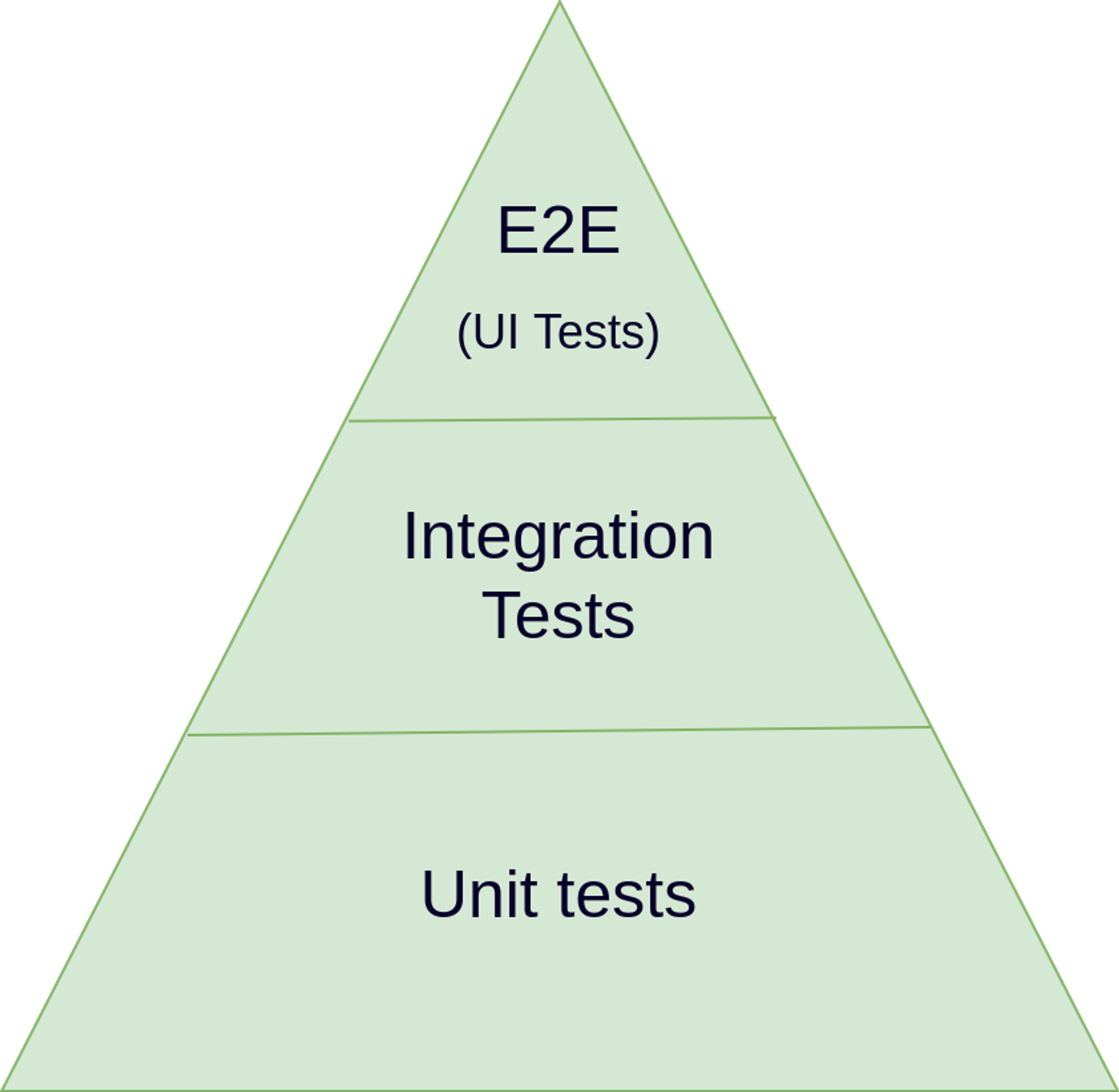

Naming conventions for these approaches vary greatly, but for this article we'll break them down into the three types below:

UI or End-to-End tests

The terminology around End-to-End (E2E) testing can be confusing as the term is sometimes used interchangeably with API acceptance testing. For the purposes of this article, we'll define E2E testing as specifically referring to tests that involve user interfaces (UI).

E2E testing focuses on simulating the user experience by testing the entire system from the user's perspective, on top of a simulated platform (e.g., a web browser), without knowledge of the internal implementation. Note that E2E tests can focus on the front-end only, using mocked API calls, or they can test the entire system by running front-end and back-end services simultaneously.

The biggest concerns regarding E2E tests are:

- High execution time. E2E tests take a long time to run, which can slow down development and limit the number of tests that can be executed.

- Fragility. E2E tests often generate false positives due to external factors and can fail even when the code is working correctly.

Integration tests

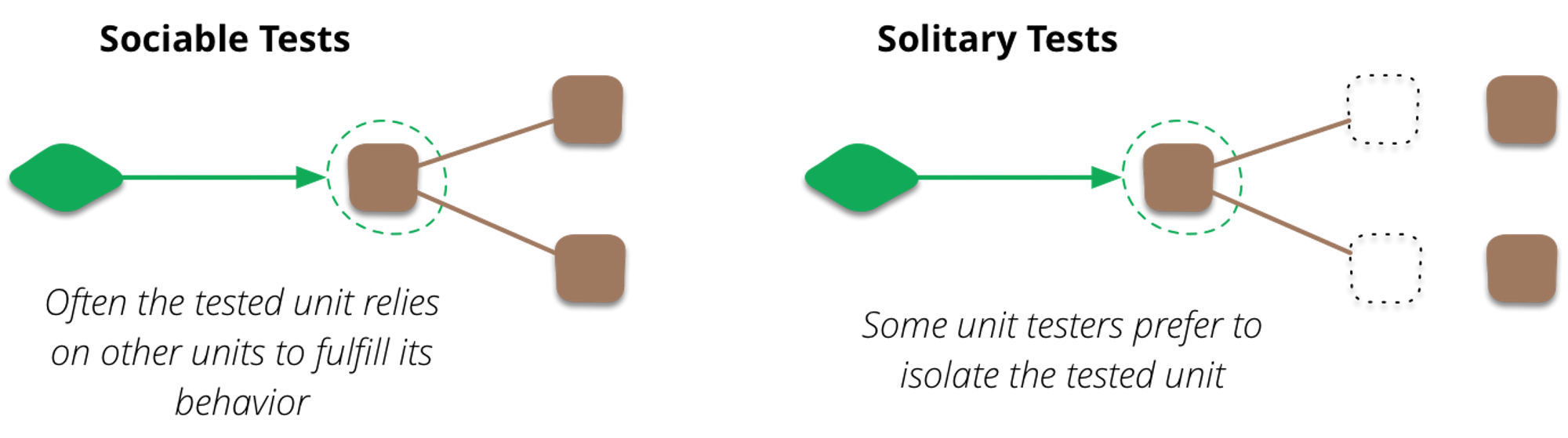

Integration tests and unit tests are sometimes lumped together, but for this article we'll use the distinction between sociable and solitary tests as the border between unit and integration tests, as discussed by Martin Fowler in his UnitTest article.

- Integration tests are sociable tests (those that rely on other units to work properly)

- Unit tests are solitary tests (those that are isolated from other units in the testing environment).

Under this definition, integration tests can exercise a fair amount of business logic, often from the user's perspective, while still keeping a moderate runtime. Let's look at some examples, split between back-end and front-end.

Backend integration tests

For back-end development, integration tests are responsible for testing a complete “execution flow”. For RESTful APIs, for example, this means sending a request to an endpoint and asserting that the response is as expected. For GraphQL APIs, it means sending a query or mutation for a specific field and asserting on the result. The rationale is the same for any type of API.

Note that it is often necessary to mock certain parts of the code, particularly external API calls or database interactions. This isolates the system under test and keeps test results deterministic and reliable.

Frontend integration tests

In frontend development, integration tests also cover the complete "execution flow", but they focus on the state of a DOM-like structure rather than on API request handling. In short, frontend integration testing tools closely simulate how a browser is used in a real application, but require less configuration, run significantly faster, and generate fewer false positives than E2E tests.

A great tool for web frontend integration tests is Testing Library, which offers an API for several frontend frameworks. Its guiding principle is that “The more your tests resemble the way your software is used, the more confidence they can give you”, which I strongly agree with. In practical terms, a Testing Library test looks like this:

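```javascript
import {
  fireEvent,
  getByLabelText,
  getByText,
  queryByTestId,
  waitFor,
} from '@testing-library/dom'

test('login flow', async () => {
  // getDOM() is a placeholder for whatever renders the login form
  // into a container element in your framework of choice.
  const container = getDOM()

  // Get form elements by their label text.
  const emailInput = getByLabelText(container, 'Email')
  const passInput = getByLabelText(container, 'Password')

  // Fire change events so that framework-bound inputs also pick up the values.
  fireEvent.change(emailInput, { target: { value: 'myemail@test.com' } })
  fireEvent.change(passInput, { target: { value: '123456' } })

  // Get elements by their text, just like a real user does.
  fireEvent.click(getByText(container, 'Sign in'))

  // Expects a signed-in label to eventually appear.
  await waitFor(() =>
    expect(queryByTestId(container, 'signed-in-label')).toBeTruthy(),
  )
})
```

As you can see, the test script simulates a common user behavior on a login form: first typing the email and password, then clicking the “Sign in” button.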

Unit tests

These tests validate small parts of the system. They have a major performance advantage (running hundreds of them usually takes seconds) and, depending on the system's architecture, they are cheap to build and maintain.

The major concern with this approach is that it usually demands many tests to achieve reasonable coverage, and since unit tests depend on how the code is structured, it is important to know how modules interact (mainly for mocking). For this reason, the design of your production code has a decisive influence on how easy it is to build and maintain unit tests.

Front-end and back-end unit tests (for this article's definition of unit tests) have similar structures and their main goal is to ensure that small pieces of business code work as expected, using a lot of mocks to isolate the tested module.

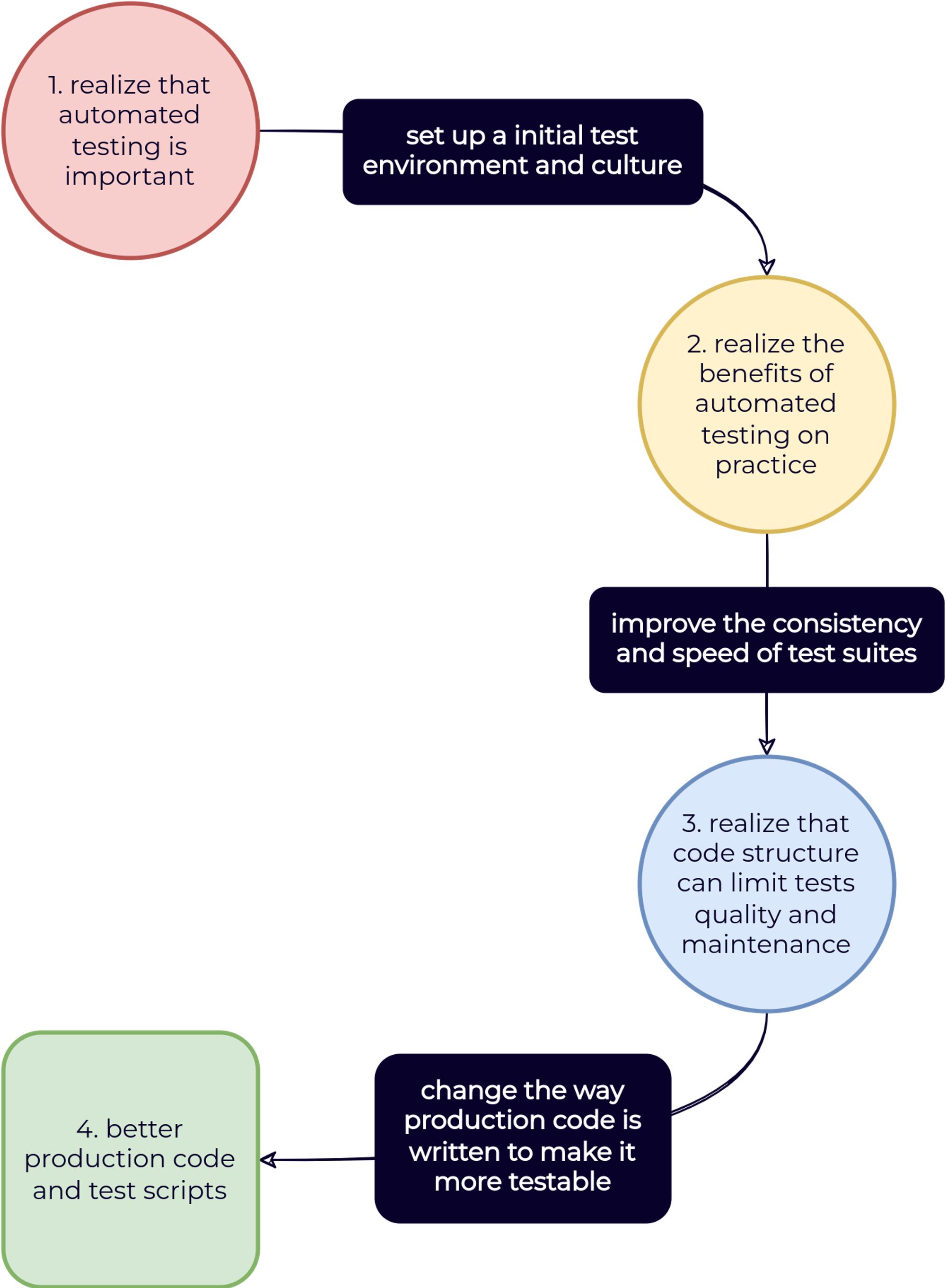

And finally, how to establish a roadmap for deploying an automated testing environment?

After all this introduction to the different testing approaches, it’s important to note that an effective automated testing strategy should combine approaches to maximize their strengths and minimize development and maintenance costs. Instead of broadly discussing a one-size-fits-all solution, I will share my personal strategy and reasoning for introducing automated testing in the context described earlier.

To make it easier for new teams to transition to automated testing, I would recommend focusing on creating tests from the user's perspective, for a couple of reasons. First, these tests don't require a deep understanding of the internal code and are written as a set of user instructions, making them easy to understand for developers and even non-technical staff. Additionally, user-perspective tests are easy to implement on top of existing software with minimal or no changes to production code.

It's important to note that this approach may include, but is not limited to, end-to-end tests. As discussed above, there are various tools for building integration tests that allow writing scripts from the user's perspective while keeping a decent level of decoupling from the underlying code structure and avoiding the often inconsistent behavior of E2E tests.

In fact, I believe the best approach for the context described earlier is to establish an integration testing environment first.

At the end of the day, I believe that a team investing, say, 100 working hours in building integration tests will end up with a more effective and reliable testing environment than a team investing the same effort in end-to-end tests.

But why integration instead of unit tests?

As previously mentioned, this is an opinion based on the context of a small team with no prior experience in automated testing. Specifically for unit tests, I think that understanding mocking practices and designing the production code in a decoupled way are crucial for the unit tests to be both effective and cost-efficient.

- I consider a unit test suite ineffective if it performs superficial tests that do not guarantee the reliability of the code snippets it is testing. Problems like “a change to one feature breaking another” are an indicator of this.

- I consider a unit test suite cost-ineffective if significant changes to the production code are required just to build it, if the test setup is complicated, or if overly complex mocks are needed.

Production code designed without automated testing in mind is likely to exhibit all of these characteristics.

The idea is not to prioritize one type of test over another, not least because the different test layers are complementary and all important. The point is that, in this author's opinion, adopting automated testing will be smoother if the initial focus is on integration tests built from the user's perspective. This first phase of adoption can take several weeks (4 to 16, I would say), during which the team should focus on improving its test-writing techniques to increase test consistency and decrease execution time.

As the team becomes more proficient in automated testing, shifting the focus toward unit testing will feel more natural; in fact, the search for lower execution times and lower false positive rates should lead the team down this path on its own. At this point, what used to be a challenge in adopting automated tests becomes one of its benefits: the perception that cleaner code is also more testable code.

At this stage, the team will find more reasons to write less coupled production code, so that writing automated tests becomes more efficient and predictable. In fact, I believe production code structure is so relevant to building effective unit tests that I'd like to evaluate some development principles from this perspective. Let's look at the five well-known SOLID principles (intended to make object-oriented designs more flexible and maintainable) from the perspective of automated tests:

- Single Responsibility Principle: This principle states that a class should have only one reason to change. By following this principle, it is easier to test a class in isolation because it has a single responsibility.

- Open-Closed Principle: This principle states that a class should be open for extension but closed for modification. By following this principle, test cases tend to be more durable, as fewer modifications will be required in existing tests when new functionality is added.

- Liskov Substitution Principle: This principle states that objects of a superclass should be replaceable with objects of a subclass without affecting the correctness of the application. By following this principle, it becomes easier to write test cases for code that depends on a class, since any object honouring the same contract, such as a mock or a test double, can stand in for it.

- Interface Segregation Principle: This principle states that a class should not be forced to implement interfaces it does not use. By following this principle, test suites tend to be smaller and more valuable, as they only test what is actually relevant to the application, in addition to having fewer dependencies.

- Dependency Inversion Principle: This principle states that high-level modules should not depend on low-level modules, but both should depend on abstractions. By following this principle, it is easier to test a class because its dependencies can easily be replaced by mocks, and writing these mocks is more straightforward, since the abstractions are already defined.

In summary, SOLID promotes smaller modules that are less prone to change, resulting in simpler and more durable test cases. Furthermore, I would like to point out that the Dependency Inversion Principle provides a very practical way to mock module boundaries, which is a remarkably effective strategy for building reliable and cost-efficient unit tests.

Conclusion

Implementing automated testing processes in technology teams has a significant impact on efficiency and on the final quality of the work. Of course, it will be necessary to learn a new set of tools, both to write and to run the tests, but it is certainly a path that will pay off in several ways. As for this adoption path, this article argues that it will be more natural if it starts with automated tests written from the user's perspective, which will also surface valuable information about the system’s behavior. Once an automated testing environment is in place (even if still maturing), the team will be able to improve the structure of the production code, which makes unit tests more efficient to write and results in a higher quality, more robust final product (and happier customers 😀).

Extra considerations

- As mentioned, the opinions expressed in this article are focused on the context of web application development. Other platforms may have different requirements, benefits, and limitations.

- In this article, we focus our arguments on functional automated tests. There is an entirely different set of non-functional tests (e.g., load testing, penetration testing…) that are outside the scope of this discussion.

- The success of introducing automated testing depends not only on the team’s maturity, but also on their willingness to adopt new processes and workflows.

- Teams using an agile development approach are likely to benefit from implementing new processes, due to the iterative nature of these methodologies. This certainly extends to the adoption of the automated testing process.

Published by

Cristiano Chiaramelli

Computer scientist and CTO at Liven. Believes in technology as a means to solve problems and generate value.
